I had the absolute privilege of attending the Big Ancient Mediterranean Conference (#BAM2016) this week. The remarkable projects, enthusiasm for all things digital, and congenial atmosphere were inspiring. Now that the conference has ended, it is a good time to organize my thoughts and point out some of the common themes that particularly struck me.

1) Our projects are ready to talk to each other. This was one of the most exciting revelations of the conference. Many of the digital humanities projects and initiatives represented here not only offer their data in downloadable formats (.csv files, JSON dumps, etc.), but also provide feature-rich APIs. Even if we are not yet at the point where we all use the same metadata and data standards (more on that later), the use of APIs with permanent URIs allows our data sets to interact meaningfully. The work of Pelagios creates an excellent medium to facilitate such communication, and opens up our data to initiatives that are not limited to studies of the ancient world.

2) Users, users, users, users. We had some spirited and fascinating debate about who the audience is for digital humanities projects, and whether it is even possible to create an application that can be used effectively by different audiences (experts, the general public, grad students, etc.). I fall squarely on the side of the idea that we engage with multiple audiences by the very nature of a freely accessible online platform, but our debate revealed a fundamental design question that is often not explicitly addressed: Exactly *who* is a digital humanities project for? Although I may differ with the voices questioning the multi-audience approach, I certainly agree that we need more usability studies and more robust user information. It is not enough for us to create DH projects that answer our individual questions for ourselves; we need to understand how to communicate with an audience that is used to the visual literacies of the web and is less familiar with the conventions of scholarly communication derived from a print medium. The sample edition of Calpurnius from the Digital Latin Library (http://digitallatin.github.io/viewer/editio-2.0.html) is a great model: it captures the information of a textual apparatus free of technical jargon, presenting critical information to a wider audience without a loss of scholarly rigor.

3) Uncertainty. A corollary to the discussion around users is the question of representing uncertainty. There was an interesting question of why we should recognize fuzzy data at all: if an application is directed solely at an academic audience, is it not correct to assume that our users implicitly know that any data or representation of the ancient world is somewhat problematic, and therefore have no problem consuming visual representations that ignore uncertainty entirely? As I think that our projects need to communicate with non-academic audiences (and indeed with academics who may not be as familiar with the inherent uncertainty of the ancient world), I see a very real need to represent the imprecision and uncertainty of our data. Almost all of the projects at BAM grappled with fuzzy data, whether that was geo-spatial (location, assignment to a place), textual (uncertain letter forms, unclear manuscript tradition), or interpretive (multiple archaeological reconstructions, the placement of garrison soldiers at a specific community). Almost every project dealt with uncertainty in a way that reflected the scholarly tradition of its subject area, such as placing notes in an apparatus or describing fuzzy data through text. I see a critical need to establish a common metadata vocabulary that can, at the very least, alert users (both human and computational) to the presence of uncertainty in our work. I also see room for a common visual literacy for representing uncertainty in maps, social networks, or other visualizations, which is a far more complex issue.

4) Metadata and documentation. Uncertainty is a good example: even if it proves impossible, impractical, or undesirable to create a common visual literacy surrounding uncertainty, we need to implement a common way of indicating and describing fuzzy data that can be computationally consumed. This returns to my first point: our projects can now talk to each other through computational agents, but we must agree on the vocabulary governing that conversation. Alignment with Pelagios will help in that regard, but I think more attention needs to be paid at all levels of DH projects to metadata standards. For DH projects in the ancient world, the ontology for Linked Ancient World Data offered by LAWD (https://github.com/lawdi/LAWD) should be a starting point.

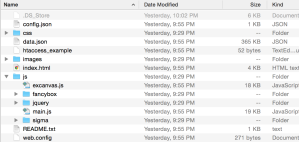
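To make the idea of computationally consumable uncertainty a little more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `certainty` field, its three values, the `example:` identifiers, and the `flag_uncertain` helper are hypothetical and are not taken from LAWD, Pelagios, or any project presented at BAM. It only shows how a shared field would let a program, and not just a human reader, notice fuzzy data.

```python
import json

# Hypothetical shared vocabulary (illustrative only, not from LAWD or Pelagios).
CERTAINTY_LEVELS = ("certain", "probable", "uncertain")

# Hypothetical records as one project might publish them through an API.
records_json = """
[
  {"id": "example:place/1", "label": "Place A", "certainty": "certain"},
  {"id": "example:place/2", "label": "Place B", "certainty": "uncertain",
   "note": "Location inferred from a single itinerary."},
  {"id": "example:place/3", "label": "Place C"}
]
"""


def flag_uncertain(records):
    """Return copies of the records a consumer should treat with caution.

    A record with a missing or unrecognized certainty statement is
    labeled "unknown" and flagged as well, rather than assumed certain.
    """
    flagged = []
    for record in records:
        level = record.get("certainty")
        if level not in CERTAINTY_LEVELS:
            level = "unknown"
        if level != "certain":
            flagged.append({**record, "certainty": level})
    return flagged


records = json.loads(records_json)
flagged = flag_uncertain(records)
for record in flagged:
    print(record["id"], record["certainty"])
```

The design choice worth noting is that silence is not read as certainty: a record that says nothing about its reliability is flagged along with the explicitly uncertain one. A real vocabulary would need far more nuance (the kind of uncertainty, its source, who asserted it), which is exactly the conversation a common standard would have to settle.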

Much like the slow, often tedious process of generating metadata, creating documentation for DH projects is often overlooked. From comments in code to records of design decisions, DH documentation needs to go beyond the narrative of the research question and capture the entire creative, intellectual, and industrial process of a DH project. The suggestion to look to the hard sciences for guidance in this process is a fruitful place to start.

5) The use of open-source repositories and the continued importance of institutional support. Most of the projects at BAM had a presence on GitHub, and there was some very interesting discussion around the practicality and usefulness of a non-profit, academically oriented alternative. This debate had as a background the reality that GitHub and other free services are currently a critical component of our work, as many DH projects operate on a shoestring budget and are dependent on largess from an institution or on grants. Such funding is often uncertain; Pleiades, one of the most exemplary projects at BAM, has a 50% success rate at securing NEH funding. For smaller projects this rate may be even lower; some participants indicated that the reward-to-work ratio of grant applications is not attractive for smaller projects.

There is some good news though, as many institutions have expressed growing interest in the digital humanities as a field. As a digital humanities community we need to build on this interest with a push for institutional backing. The University of Iowa clearly demonstrated the excellent outcomes of a group that is both dedicated to digital humanities and able to provide hosting, archiving, and other technical support.

6) The continued need for face-to-face gatherings. While we have many electronic forums for communication (Twitter, Slack, IRC, site forums, etc.), there is still something special that happens when DH scholars are brought together for several days, freed of other distractions, and able to think about the same issues as a group. For me, the headspace of a conference is entirely different from using Skype in my office; my other projects and papers are out of sight, (largely) out of mind, and my focus is squarely on the discussion.

7) Release the tweets. One place where documentation is somewhat overlooked is at conferences like BAM. Many conferences generate an end product like proceedings, which, while valuable, cannot capture the conversation that surrounds each presentation. The incredible use of Twitter by BAM attendees, and the use of Storify to capture those tweets, can serve as a model for other conference proceedings. Conference organizers should establish an “official” Twitter hashtag, advertise it widely on social media, and ensure that the conference venue offers free wifi access to attendees. This extends the reach of the conference in real time to attendees and remote presenters who would otherwise be unable to participate in the conversation. A critical component of this is also archiving: for BAM, the use of Storify (part 1, part 2) and the support of Iowa libraries ensure that there is a searchable account of the conference, and of the wider conversation it sparked, that can be referenced later.

The BAM conference generated a lot of intriguing conversation and displayed a host of excellent projects. If this kind of interest, scholarship, and congeniality can be maintained, the future of DH is bright indeed.
